Giving AI a Brain Boost: Are We Making It More Confident, But Also More Wrong?

We're constantly trying to make AI better, and one popular method is giving it access to more information. This is often done through a technique called Retrieval-Augmented Generation (RAG). Think of it like giving AI a textbook to study before answering questions. The idea is that with more context, the AI will give more accurate and reliable answers.

But new research is showing that this approach isn't as straightforward as we thought. While RAG does improve AI performance in some ways, it also brings some unexpected problems, especially when it comes to hallucinations, where the AI confidently makes up false information.

What is Sufficient Context?

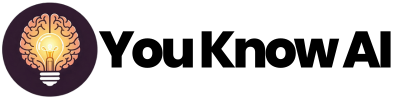

The researchers in this study introduce a concept called "sufficient context". This refers to whether the information you give the AI actually contains enough detail to answer the question.

- Sufficient Context: The provided information has everything needed to answer the question. For example, if you ask "Who is Lya L. married to?" and the provided text says "Lya L. married Paul in 2020," that's sufficient context.

- Insufficient Context: The information is missing key details needed to answer the question. For example, if you ask "Who is Lya L. married to?" and the provided text only says "Lya L. married Tom in 2006… They divorced in 2014… Lya went on dates with Paul in 2018…", that's insufficient context. The text covers a past marriage and some dates, but it never says who Lya is married to now.
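One way to picture how a model could be asked to make this call is sketched below. This is only an illustration: the prompt wording, the one-word label format, and the function names `build_sufficiency_prompt` and `parse_sufficiency_label` are assumptions made for this example, not the prompt or code used in the study.

```python
def build_sufficiency_prompt(question: str, context: str) -> str:
    """Build a prompt asking a judge model to label the context.

    The wording here is a hypothetical example, not the study's prompt.
    """
    return (
        "You are given a question and some context.\n"
        "Reply with exactly one word: SUFFICIENT if the context alone contains "
        "everything needed to answer the question, otherwise INSUFFICIENT.\n\n"
        f"Question: {question}\n"
        f"Context: {context}\n"
        "Label:"
    )


def parse_sufficiency_label(reply: str) -> bool:
    """Return True only when the judge's reply starts with SUFFICIENT.

    Anything else, including INSUFFICIENT or an unexpected reply,
    is treated as insufficient to stay on the cautious side.
    """
    return reply.strip().upper().startswith("SUFFICIENT")


prompt = build_sufficiency_prompt(
    "Who is Lya L. married to?",
    "Lya L. married Paul in 2020.",
)
# `prompt` would be sent to whichever LLM acts as the judge;
# its text reply is then passed to parse_sufficiency_label.
```

Treating any unexpected reply as insufficient is a design choice for this sketch. It errs toward abstaining rather than answering from context that may not support an answer.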

The Problem: AI Hallucinations

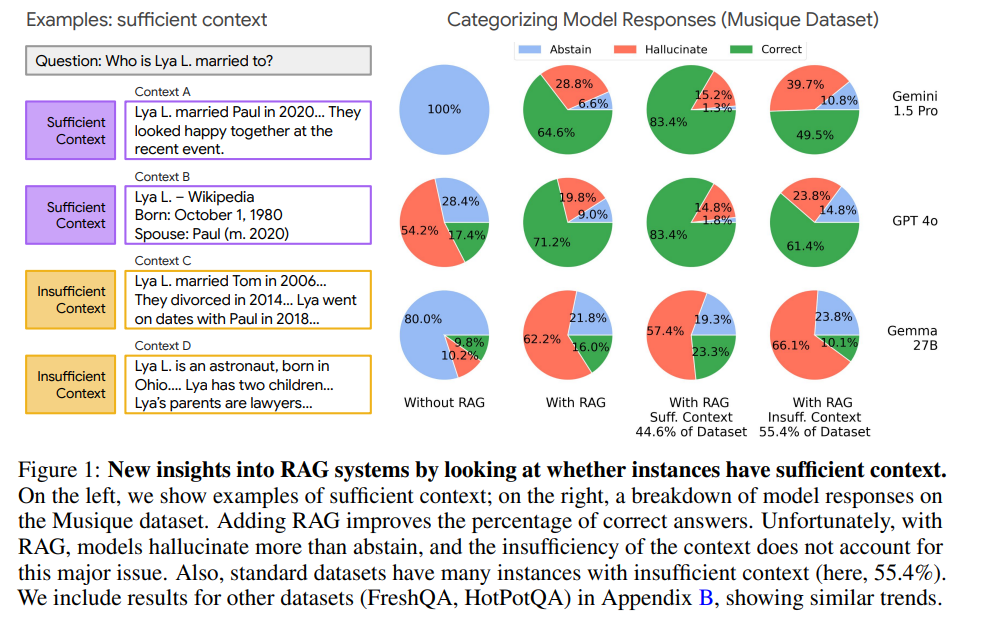

The study found that even when AI has sufficient context to answer a question, it still hallucinates and provides incorrect answers a surprising amount of the time. In other words, just because the information is there doesn't mean the AI will use it correctly.

Even more troubling, RAG can sometimes make the hallucination problem worse. The study found that when AI is given any context (even if it's not sufficient), it becomes less likely to admit that it doesn't know the answer. Instead, it will confidently make up an answer, even if that answer is wrong. In other words, extra context seems to raise the model's confidence without necessarily raising its accuracy.
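To make the comparison concrete, each response can be placed in one of three buckets (correct, abstain, or hallucinate) and the share of each bucket compared with and without context. The sketch below assumes the responses have already been labeled. The function name `outcome_rates` and the toy labels are made up for illustration and are not data from the study.

```python
from collections import Counter

CATEGORIES = ("correct", "abstain", "hallucinate")


def outcome_rates(outcomes: list[str]) -> dict[str, float]:
    """Return the fraction of responses in each category."""
    if not outcomes:
        raise ValueError("need at least one labeled response")
    counts = Counter(outcomes)
    return {name: counts[name] / len(outcomes) for name in CATEGORIES}


# Toy labels, invented for this example only.
without_context = ["correct", "abstain", "abstain", "hallucinate"]
with_context = ["correct", "correct", "hallucinate", "hallucinate"]

print(outcome_rates(without_context))
print(outcome_rates(with_context))
```

In this made-up example the abstain share drops to zero once context is added while the hallucinate share rises, which is the pattern the study describes.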

Why is this happening?

The researchers identified a few reasons why AI might hallucinate even with sufficient context:

- Gaps in Knowledge: The provided context might not be enough to fully answer the question, but it fills a gap in the AI's existing knowledge, leading it to make an incorrect inference.

- Question Ambiguity: The question might be unclear, and the AI guesses at the wrong interpretation.

- Reasoning Errors: The AI might fail to correctly combine information from the context to arrive at the right answer, especially in complex, multi-step questions.

What can we do about it?

The researchers explored a few ways to reduce hallucinations in RAG systems:

- Selective Generation: This involves using a separate AI model to judge whether the provided context is sufficient to answer the question. If the context is deemed insufficient, the AI is prompted to abstain from answering. This approach was shown to improve accuracy.

- Fine-tuning: This involves training the AI to be more likely to say "I don't know" when it's unsure of the answer. However, the study found that this approach can sometimes lead to the AI abstaining too often, even when it could have provided a correct answer.
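The selective generation idea described above can be sketched in a few lines. This is a minimal illustration of the flow as summarized here (judge first, then answer or abstain), not the researchers' implementation. The judge and generator are passed in as plain functions, and `toy_judge` and `toy_generate` are hypothetical stand-ins so the example runs without any model.

```python
from typing import Callable

ABSTAIN = "I don't know."


def selective_answer(
    question: str,
    context: str,
    judge_sufficient: Callable[[str, str], bool],
    generate: Callable[[str, str], str],
) -> str:
    """Answer only when the judge deems the context sufficient."""
    if not judge_sufficient(question, context):
        return ABSTAIN
    return generate(question, context)


# Hypothetical stand-ins; a real system would call LLMs in both places.
def toy_judge(question: str, context: str) -> bool:
    # Crude rule for the marriage example only: a marriage is mentioned
    # and no divorce follows.
    text = context.lower()
    return "married" in text and "divorced" not in text


def toy_generate(question: str, context: str) -> str:
    return f"Based on the context: {context}"


print(selective_answer(
    "Who is Lya L. married to?",
    "Lya L. married Paul in 2020.",
    toy_judge,
    toy_generate,
))
print(selective_answer(
    "Who is Lya L. married to?",
    "Lya L. married Tom in 2006. They divorced in 2014.",
    toy_judge,
    toy_generate,
))
```

Keeping the judge separate from the generator means the abstain decision does not depend on the answering model's own confidence, which is the part that extra context appears to inflate.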

Key Takeaways

- Giving AI more information isn't always a guaranteed path to better answers.

- AI can hallucinate even when it has access to the correct information.

- RAG can sometimes make the hallucination problem worse by making AI more confident, and therefore less likely to abstain when unsure.

- Techniques like selective generation can help reduce hallucinations, but there's still much work to be done.

Some thoughts from Marie

What struck me the most about this paper was just how high hallucination rates can be when using RAG, especially when the LLM is not given sufficient context.

I think this tells us that we can get better performance from an LLM when we prompt it with sufficient information.

Also, this explains why there are so many hallucinations in the responses from the agents that I'm trying to make with Google's Vertex AI Agent Builder, as these rely on RAG.

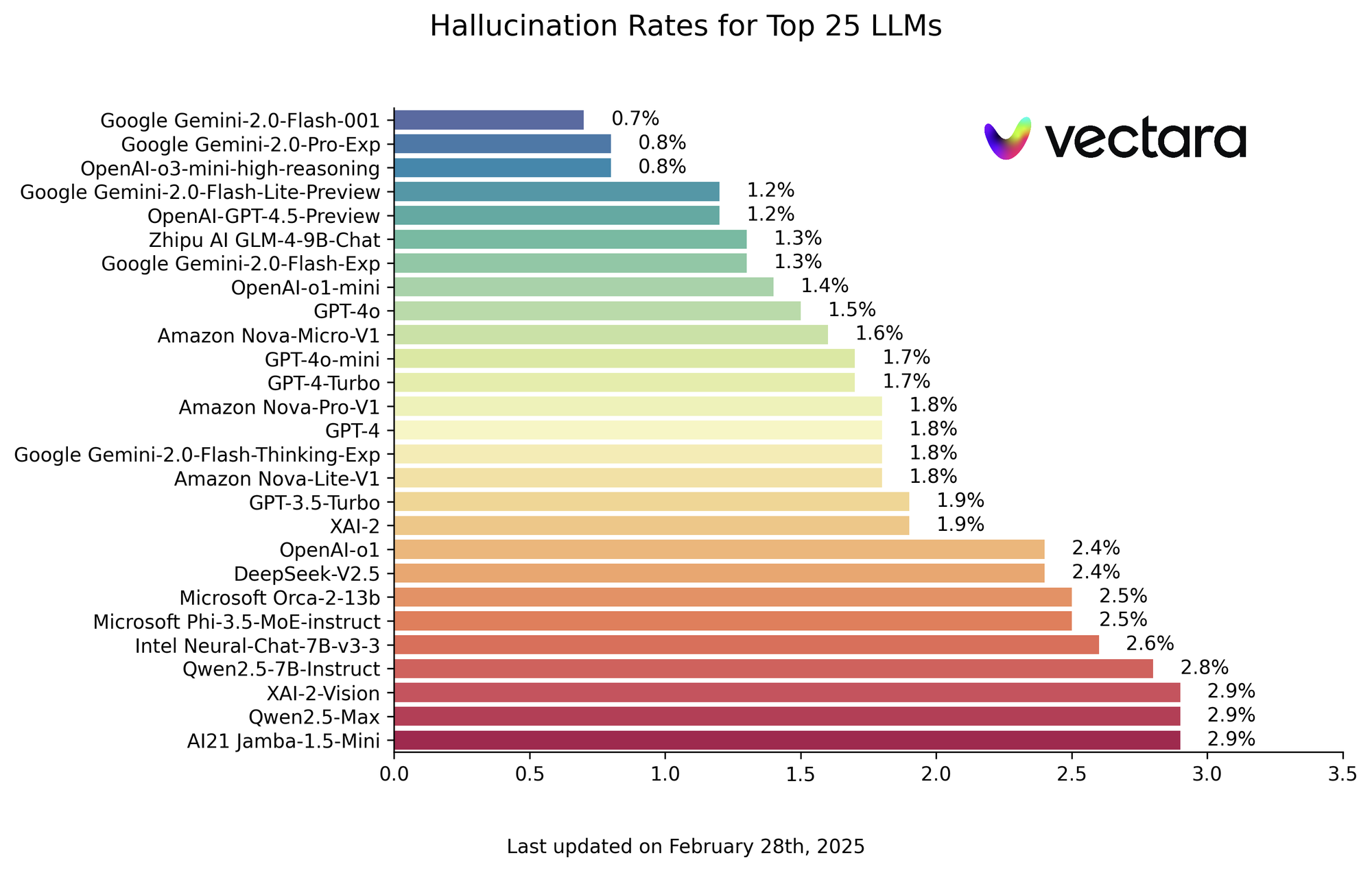

Keep in mind that those hallucination rates are specifically for LLMs using RAG. This doesn't mean that the overall hallucination rate for LLMs is as high as in the charts above.

Recent research from Vectara that uses the Hughes Hallucination Evaluation Model shows that when it comes to summarizing documents, hallucination rates are much lower.

We still have work to do when it comes to making LLMs more accurate! But it's encouraging to see the progress that has been made.