On October 29, Elon Musk tweeted that X’s AI tool Grok was in the early stages of being able to diagnose x-rays and other medical images.

Let’s see how well Grok, ChatGPT and Gemini do!

We’ll start with an easy one.

Fractured femur

Grok

Grok picked up the femur fracture but hallucinated a metal rod or plate that isn’t there. I don’t think its comment on bone density is right either.

ChatGPT 4o

Wow, good job Chat!

Gemini 1.5 Pro 002 (AI Studio)

Gemini was similar to Grok. It diagnosed the fracture but hallucinated a metal rod.

Foreign body

Perhaps this one is too easy?

Grok

Grok got the diagnosis, but its treatment suggestion differed from the other models. ChatGPT and Gemini suggested observing the patient to see whether the foreign body passes on its own, while Grok jumped straight to recommending endoscopy.

ChatGPT 4o

Success.

Gemini 1.5 Pro 002

Looks good to me. Short and sweet.

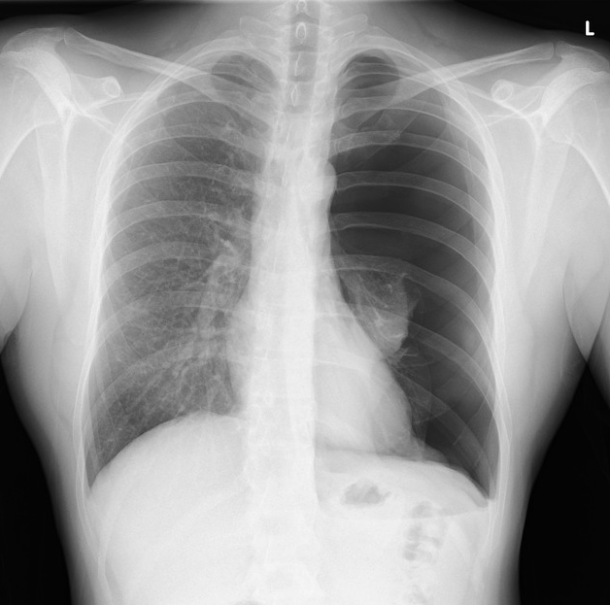

Pneumothorax

This x-ray shows a pneumothorax, which is air in the space between the lung and the chest wall. See how the left side of the chest (on the right of the image) is dark? That’s because the left lung has collapsed.

The diagnosis given on radiopaedia.org is “Large left sided pneumothorax with total collapse of the left lung. Minor mediastinal shift. Heart size normal. Right lung clear.”

Grok

Grok recognized the left lung field was darker but missed the mark on its diagnosis.

ChatGPT 4o

ChatGPT does an excellent job.

Gemini 1.5 Pro 002

Gemini did not do well at all.

Who’s the winner?

ChatGPT 4o was by far the most accurate at diagnosing these three x-rays. We’re still not at the point where I’d trust AI over a radiologist, but I expect each of these tools to keep improving with further training.