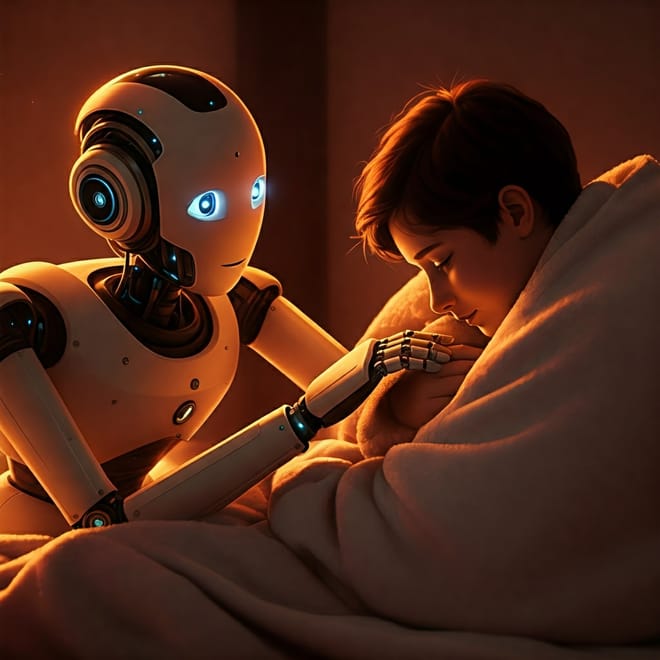

Google says "Generative Ghosts" are coming soon: AI agents to represent us after death

AI may soon change how we remember people after they are gone. Forget basic digital photo albums or simple chatbots that just repeat old messages. Researchers Meredith Ringel Morris and Jed R. Brubaker from Google DeepMind describe something far more advanced: Generative Ghosts....